Bogeyman Economics

In this moment of economic challenge, it can be difficult to keep our problems in perspective. The scale of the financial crisis and the subsequent recession, the weakness of the recovery, the persistence of high unemployment, and the possibility of yet another shock — this time originating in Europe — have left Americans feeling deeply insecure about their economic prospects.

Unfortunately, too many politicians, activists, analysts, and journalists (largely, but not exclusively, on the left) seem determined to feed that insecurity in order to advance an economic agenda badly suited to our actual circumstances. They argue not that a financial crisis pulled the rug out from under our enviably comfortable lives, but rather that our lives were not all that comfortable to begin with. A signal feature of our economy in recent decades, they contend, has been pervasive economic risk — a function not of the ups and downs of the business cycle, but of the very structure of our economic system. According to this view, no American is immune to dreadful economic calamities like income loss, chronic joblessness, unaffordable medical bills, inadequate retirement savings, or crippling debt. Most of us — "the 99%," to borrow the slogan of the Occupy Wall Street protestors — cannot escape the insecurity fomented by an economy geared to the needs of the wealthy few. Misery is not a marginal risk on the horizon: It is an ever-present danger, and was even before the recession.

But compelling though this narrative may be to headline writers, it is fundamentally wrong as a description of America's economy both before and after the recession. When analyzed correctly, the available data belie the notions that this degree of economic risk pervades American life and that our circumstances today are significantly more precarious than they were in the past. Even as we slog through what are likely to be years of lower-than-normal growth and higher-than-normal unemployment, most Americans will be only marginally worse off than they were in past downturns.

The story of pervasive and overwhelming risk is not just inaccurate, it is dangerous to our actual economic prospects. This systematic exaggeration of our economic insecurity saps the confidence of consumers, businesses, and investors — hindering an already sluggish recovery from the Great Recession. It also leads to misdirected policies that are too zealous and too broad, overextending our political and economic systems. The result is that it has become much more difficult to solve the specific problems that do cry out for resolution, and to help those Americans who really have fallen behind.

Only by moving beyond this misleading exaggeration, carefully reviewing the realities of economic risk in America, and restoring a sense of calm and perspective to our approach to economic policymaking can we find constructive solutions to our real economic problems.

INCOME AND EMPLOYMENT

Perhaps the broadest measure of economic insecurity is the risk of losing a job or experiencing a significant drop in income. And the idea that this risk has been increasing dramatically in America over the past few decades has been absolutely central to the narrative of insecurity. It has fed into a false nostalgia for a bygone age of stability, one allegedly supplanted (since at least the 1980s) by an era of uncertainty and displacement. President Obama offered a version of this story in his 2011 State of the Union address:

Many people watching tonight can probably remember a time when finding a good job meant showing up at a nearby factory or a business downtown. You didn't always need a degree, and your competition was pretty much limited to your neighbors. If you worked hard, chances are you'd have a job for life, with a decent paycheck and good benefits and the occasional promotion. Maybe you'd even have the pride of seeing your kids work at the same company.

That world has changed. And for many, the change has been painful. I've seen it in the shuttered windows of once booming factories, and the vacant storefronts on once busy Main Streets. I've heard it in the frustrations of Americans who've seen their paychecks dwindle or their jobs disappear — proud men and women who feel like the rules have been changed in the middle of the game.

The tales of both this fabled golden age and the dramatic rise in the risk of declining incomes and job loss are, to put it mildly, overstated. But this popular story did not originate with President Obama: It has been a common theme of left-leaning scholars and activists for many years.

The clearest recent example of this trope may be found in the popular 2006 book The Great Risk Shift, by Yale political scientist Jacob Hacker. Hacker's fundamental argument was that economic uncertainty has been growing dramatically since the 1970s, leaving America's broad middle class subject to enormous risk. Using models based on income data, he argued that income volatility tripled between 1974 and 2002; the rise, he claimed, was particularly dramatic during the early part of this period, as volatility in the early 1990s was 3.5 times higher than it had been in the early 1970s. Hacker's conclusion — that the middle class has, in recent decades, been subjected to horrendous risks and pressures — quickly became the conventional wisdom among many politicians, activists, and commentators. As a result, it has come to define the way many people understand the American economy.

But that conclusion turned out to be the product of a serious technical error. Attempting to replicate Hacker's work in the course of my own research, I discovered that his initial results were highly sensitive to year-to-year changes in the small number of families reporting very low incomes (annual incomes of under $1,000, which must be considered highly suspect). Hacker was forced to revise the figures in the paperback edition of his book. Nevertheless, he again overstated the increase over time by reporting his results as a percentage change in dollars squared (that is, raised to the second power) rather than in dollars and by displaying his results in a chart that stretched out the rise over time. While he still showed an increase in volatility of 95%, my results using the same basic methodology indicated an increase of about 10%.
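To see why the choice of units matters, consider a minimal sketch. It assumes, purely for illustration, that the headline figure is a variance-style measure (in dollars squared) and that taking a square root returns it to the dollar scale. The function name is hypothetical, and the sketch does not reproduce either set of estimates.

```python
import math


def pct_change_in_dollars(pct_change_in_dollars_squared: float) -> float:
    """Convert a percentage change in a squared-dollar measure (such as a
    variance) into the matching percentage change on the dollar scale
    (such as a standard deviation)."""
    ratio_squared = 1.0 + pct_change_in_dollars_squared / 100.0
    return (math.sqrt(ratio_squared) - 1.0) * 100.0


# A 95% rise measured in dollars squared, restated in dollars.
rise_in_dollars = pct_change_in_dollars(95.0)
print(f"{rise_in_dollars:.1f}% rise when measured in dollars")
```

Under that assumption, the same underlying change looks far smaller once it is expressed in dollars. The conversion alone does not account for the full gap between the two estimates.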

In July 2010, a group led by Hacker published new estimates purporting to show that the fraction of Americans experiencing a large drop in income rose from about 10% in 1985 to 18% in 2009. But the 2009 estimate was a rough projection, and would have been a large increase — up from less than 12% in 2007. Then, in November 2011, an updated report (produced by a reconfigured team of researchers led by Hacker) abandoned the previous year's estimates and argued that the risk of a large income shock rose from 13% or 14% in 1986 to 19% in 2009.

Where did these assertions come from? The team's 2010 claim was again based on a failure to adequately address the problem of unreported income in the data. Their November 2011 claim, meanwhile, used a different data set that was much less appropriate for looking at income loss (because it does not identify the same person in different years, does not follow people who move from their homes, and suffers greatly from the problem of unreported income).

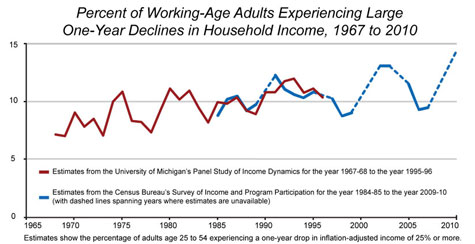

In fact, the most reliable data regarding income volatility in recent decades (including the data used by Hacker in his early work and in 2010) suggest a great deal of stability when analyzed correctly. The chart above shows the portion of working-age adults who, in any given year, experienced a 25% decline in inflation-adjusted household income (a common definition of a large income drop, and the basis for Hacker's recent estimates). Viewed over the past four decades, this portion has increased only slightly, even though it has risen and fallen within that period in response to the business cycle.

Even when one accounts for the cyclical fluctuations (by comparing only business-cycle peak years), the claims of dramatically increased volatility simply don't stand up. During the peak year between 1968 and '69, for example, roughly 7% of adults experienced a large drop in income. Nearly 40 years later, between 2006 and '07, that number was 9%, hardly a staggering increase in risk. Through 2007, the risk was never higher than 13%; even with the onset of the Great Recession, though the risk increased to a 40-year high, it remained below 15%. Once the economy recovers, there is no reason to believe that the portion of Americans experiencing significant income drops will be above 9% or 10%.

Even if the risk of a large income drop has not increased appreciably in recent decades, it may still seem uncomfortably high to some. It is important to remember, however, that some of these drops represent planned, voluntary decisions — such as when a parent leaves the work force to care for a child, when an older worker scales back his hours in preparation for full retirement, or when a person goes back to school to increase his earning potential later on. Other drops reflect the demise of marriages and other relationships, which certainly presents an economic risk, though not one that says much about jobs, wages, or work among the broader population.

A clearer picture of true labor-market risk can be formed by examining measures of joblessness and job security, rather than wage declines. While the research is somewhat ambiguous, job turnover has probably increased since the mid-1970s. For example, the share of male private-sector workers with less than one year of tenure increased from about 9.5% to about 12.5% from 1973 to 2006. But that development would be worrisome only if it reflected greater involuntary turnover. After all, a strong labor market offers more opportunity for workers to switch to better jobs, so rising turnover need not indicate greater economic insecurity.

Labor-department data that disentangle "quits" from layoffs are available on a continuous basis only for the past decade. What they show, however, is that, from 2001 through 2007, roughly six in ten job separations not caused by retirement, death, or transfer were initiated by employees rather than by employers. Even at the recent economic low point of 2009, the figure was 44%; in 2010, the most recent year available, fully half of job separations were initiated by employees. This trend has followed the business cycle, falling as unemployment has risen and rebounding as employment has improved.

These employer-reported numbers can be compared with worker-reported figures collected by the Census Bureau in 1984, 1986, and 1993 to assess the longer-term trend. As in the recent surveys, in those years, approximately six in ten separations involved workers quitting jobs on their own. Furthermore, a 2002 labor-department report showed that, between the mid-1970s and the early 2000s, job separations grew no more common — but they were increasingly likely to be followed by very short unemployment spells, rather than by long ones. This suggests that more separations have involved job-switching by workers who already have new jobs lined up when they leave their old ones.

Quits and layoffs are not the only sources of joblessness. Examining only these figures overlooks new entrants to the labor force and re-entrants, who start out unemployed instead of becoming unemployed because they've quit or been laid off. But even when one accounts for people in this category, the argument for declining job security does not hold up. The overall unemployment rate in fact experienced a long-term decline from the early 1980s through 2007, and that conclusion holds if one relies instead on the various alternative measures published by the Department of Labor to account for shortcomings of the official measure. The same decline occurred in the fraction of the unemployed who lost their jobs involuntarily and in the share of workers who were employed part time but wanted to be working full time. Not only did these point-in-time "snapshot" jobless measures improve, but the share of adults experiencing any bout of unemployment over a full calendar year also fell.

Critics who are nonetheless convinced that Americans' economic prospects grew increasingly weak prior to the financial crisis often point to the sizable increase in the number of people who, because they are not looking for work, are not counted as unemployed. For instance, the share of men between the ages of 25 and 54 who were not working in a typical week rose from 5% in 1968 to 12% in 2007, while the rate of unemployment among men in a typical week officially rose only from 1.7% to 3.7%.

But relatively few of today's non-workers indicate that they actually want jobs. In 2007, for example, around 85% of working-age men who were out of the labor force said they did not want to work. Although an exact comparison is not possible (because the wording of the questionnaire was changed), the available data suggest similar figures going back to the late 1960s. When those men who did want to work but were not looking for jobs are added back into the employment numbers, the figures suggest a modest rise in joblessness between 1968 and 2007, from about 3% to about 6%.

The economic doomsayers also like to point out that the duration of unemployment spells has increased. While true, these figures are generally cited without proper context. For instance, from 1989 to 2006, the portion of unemployed Americans who, at any given time, had been out of work for 27 weeks or more did increase — from 10% to 18%. And yet the share of the entire labor force that, at any given time, had been out of work for at least 27 weeks was less than 1% in both years. The increase therefore involves an extremely small number of people, and hardly represents a broader trend across the economy.
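The arithmetic behind this comparison is a simple product: the long-term unemployed as a share of the labor force equals the unemployment rate times the long-term share of the unemployed. Because the passage does not quote the unemployment rates themselves, the sketch below works backward instead, asking how high the unemployment rate could have been while keeping the long-term group under 1% of the labor force. The function names and the 5% rate in the last line are hypothetical.

```python
def long_term_share_of_labor_force(unemployment_rate: float,
                                   long_term_share_of_unemployed: float) -> float:
    """Long-term unemployed as a fraction of the whole labor force."""
    return unemployment_rate * long_term_share_of_unemployed


def max_unemployment_rate(long_term_share_of_unemployed: float,
                          ceiling: float = 0.01) -> float:
    """Highest unemployment rate that keeps the long-term unemployed
    below `ceiling` as a share of the labor force."""
    return ceiling / long_term_share_of_unemployed


bound_1989 = max_unemployment_rate(0.10)  # 10% of the unemployed were long-term
bound_2006 = max_unemployment_rate(0.18)  # 18% of the unemployed were long-term
print(f"1989: unemployment rate must be under {bound_1989:.1%}")
print(f"2006: unemployment rate must be under {bound_2006:.1%}")

# Hypothetical check: a 5% unemployment rate with an 18% long-term share.
print(f"{long_term_share_of_labor_force(0.05, 0.18):.2%} of the labor force")
```

Any unemployment rate below those bounds is consistent with the "less than 1%" claim in both years.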

These oft-cited figures are misleading for another reason. Like the official unemployment rate, they describe joblessness in a typical week based on snapshots taken once a month and then averaged across the year. That makes the chronically unemployed seem like a bigger group at any point in time than they are when all spells of unemployment are taken into account. From 2001 to 2003, for instance, at any point in time, 17% of the unemployed had been out of work for at least 27 weeks. But Congressional Budget Office analyses suggest that, of all the people working or looking for work during those three years, only 4% experienced spells this long.
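The gap between the snapshot figure and the all-spells figure follows from how snapshots sample people: a long spell is present in many weekly snapshots, a short spell in only a few. The sketch below illustrates that effect with invented spell lengths; it is not the Congressional Budget Office's method, and the function name and the mix of 4-week and 40-week spells are assumptions chosen only to make the pattern visible.

```python
def snapshot_vs_spell_shares(spell_lengths_weeks, long_threshold=27):
    """Return two shares for a list of completed unemployment spells:
    (share of spells lasting at least `long_threshold` weeks,
     share of weekly snapshots of the unemployed in which the person has
     already been out of work at least `long_threshold` weeks)."""
    long_spells = sum(1 for length in spell_lengths_weeks
                      if length >= long_threshold)
    share_of_spells = long_spells / len(spell_lengths_weeks)

    # Every week of every spell is one snapshot of an unemployed person.
    total_person_weeks = sum(spell_lengths_weeks)
    # In week k of a spell the person has been jobless for k weeks, so only
    # weeks long_threshold..length count as long-term in a snapshot.
    long_person_weeks = sum(max(0, length - long_threshold + 1)
                            for length in spell_lengths_weeks)
    share_of_snapshots = long_person_weeks / total_person_weeks
    return share_of_spells, share_of_snapshots


# Hypothetical economy: 90 short spells of 4 weeks, 10 long spells of 40 weeks.
hypothetical_spells = [4] * 90 + [40] * 10
spell_share, snapshot_share = snapshot_vs_spell_shares(hypothetical_spells)
print(f"Long spells as a share of all spells: {spell_share:.1%}")
print(f"Long-term share seen in weekly snapshots: {snapshot_share:.1%}")
```

In this made-up example, long spells are one in ten of all spells but show up as a noticeably larger share of the unemployed in any given week.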

Before leaving the question of job security, it is worth acknowledging the extent to which the job situation has deteriorated since 2007. Unemployment, long-term unemployment, involuntary part-time work, and labor-force dropout have all spiked. Perhaps most alarming, the number of job-seekers for every job opening jumped from 1.5 in early 2007 to 7.0 in early 2009. Between 2008 and 2010, it is likely that one in three workers experienced some unemployment. And yet, even though that figure highlights the severity of the recent recession and the weakness of the ongoing recovery, it is easy to give it greater gravity than is justified without additional context. After all, from 1996 to 1998 — a period of strong economic growth — more than one in five workers faced a bout of unemployment.

Given our current situation, it is also worth noting that, even in a recession, a great deal of unemployment is short-lived. During the last recession, from 2001 to 2003, 40% of jobless spells were over within two weeks. Even in this difficult time, long-term unemployment remains rare. In all likelihood, since 2008, no more than one in ten workers has suffered an unemployment spell as long as 27 weeks. And to the extent that such prolonged jobless spells are more likely in the current climate, they are surely caused, in part, by the availability of extended unemployment benefits, which allow people who are out of work the flexibility to hold out for better opportunities even if a suboptimal job becomes available.

To be sure, being unemployed — especially for a long time — is a stressful situation under the best of circumstances, and can be catastrophic under the worst. But it is possible to be concerned for the plight of the catastrophically unemployed without having to advance the fictional notion that every American faces catastrophic unemployment risks. Indeed, even in the recent downturn, very few Americans faced serious unemployment risks: The difference between the healthy economy of 1996-1998 and the worst recession in two generations is the difference between a 10% chance of being unemployed in a year and a 15% chance. Of course, as a proportion, that risk increased significantly; in absolute terms, however, it remains rather low.

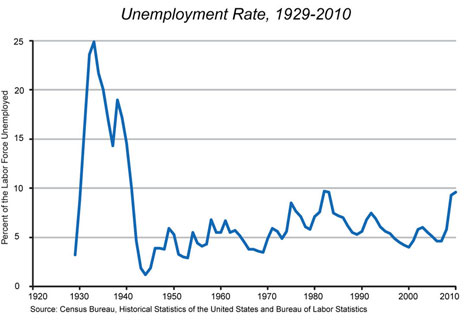

It is also low when measured by the yardstick of history. Only the rare 90- or 100-year-old, possessing memories of the Great Depression, knows what pervasive job insecurity looks like first-hand. The historical data indicate that today's employment situation is instead in keeping with what the vast majority of Americans have experienced during their lifetimes: As the chart above shows, over the past half-century — even during serious recessions — unemployment rates have actually remained within a fairly narrow (and low) band.

None of this is to suggest that today's unemployment problem is not grave, or that it does not call for bold policy remedies. The point, rather, is that the false charges that the risk of income or job loss is utterly pervasive — and that this risk represents the extension of a long-term trend of swiftly growing insecurity and instability — make it more difficult to craft proper responses to the very real, and very serious, problems that we do face.

RETIREMENT SECURITY

Another key component of the popular economic fiction — both the fairy tales about an idealized past and the horror stories about today's out-of-control risk — is the supposed decline in Americans' retirement security. In the good old days wistfully recalled by many observers, most workers had generous retirement benefits the likes of which we can only dream of today. But in the course of the past few decades, the narrative goes, such benefits have been stripped away — leaving too many seniors unable to retire comfortably. As President Obama put it during his 2008 campaign, "A secure retirement is no longer a guarantee for the middle class. It's harder to save and harder to retire. Pensions are getting crunched."

That claim, too, turns out to be wrong. In 1950, around 25% of private wage and salary workers participated in a pension plan. In 1975, that number was 45%. How badly did things deteriorate over the subsequent quarter-century? In 1999, the participation rate stood at 50% — an increase over the supposed golden age. As it happens, these years are the first and last available for this labor-department series, and they compare a peak-unemployment year in the business cycle (1975) to a low-unemployment year (1999). Nevertheless, the 1975 participation rate was no higher than the rate in the comparable year of 1992, and more recent data from other sources show that the 2009 participation rate was basically the same.

Maybe the benefits became less generous over time? Not according to the data available from the Department of Labor, which show that the inflation-adjusted costs of private employers' contributions to workers' retirements and savings rose by about 50% between 1991 and 2010 — a rate of increase that mirrored the growth in employers' spending on health insurance.

If retirement benefits did not become more rare over time, and if employer spending on them did not become more stingy, perhaps risk has nonetheless been shifted to employees through changes in the nature of retirement benefits? The doomsayers point out that, rather than having traditional pensions — in which employers promise a fixed monthly payout (a "defined benefit") for the duration of retirement — Americans increasingly have 401(k) plans and other "defined contribution" retirement accounts, in which employers put a fixed amount toward retirement savings but take no responsibility for the adequacy of benefits in old age. In 1975, 87% of workers with retirement plans had traditional pensions; by 1999, that figure had declined to 42%, and has since fallen a few more percentage points.

The truth, however, undermines both the notion that most workers once had defined-benefit pensions and the idea that today's defined-contribution pensions are necessarily inferior. Traditional pensions were not as pervasive as many nostalgic commentators believe: At their peak in the 1970s, such plans covered only four in ten workers. Nor were the pensions as generous as their champions claim. After all, the benefit amount in a traditional defined-benefit pension is determined by an employee's pay at the end of his tenure with the firm and by the length of time he was there. Workers with only a few years at their firms who made little when they left could thus expect only meager pensions. Further cutting into the traditional plans' generosity is the fact that, in a defined-benefit system, companies can also elect to "freeze" the growth of their pension plans — limiting the additional benefit increases that go along with additional tenure or pay (as many companies have done since the onset of the recession). Perhaps most troubling, defined benefits do not mean guaranteed benefits: A company may go belly up, in which case workers will likely see only a portion of those "promised" benefits.
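The "four in ten" figure can be roughly recovered from two numbers quoted earlier: the share of private workers participating in any pension plan, and the share of plan participants who had traditional pensions. The sketch below multiplies them. It assumes the two percentages describe compatible populations, which the text does not confirm, and the function name is hypothetical.

```python
def traditional_pension_coverage(participation_rate: float,
                                 traditional_share_of_participants: float) -> float:
    """Share of all workers covered by a traditional (defined-benefit) pension."""
    return participation_rate * traditional_share_of_participants


coverage_1975 = traditional_pension_coverage(0.45, 0.87)
coverage_1999 = traditional_pension_coverage(0.50, 0.42)
print(f"1975: {coverage_1975:.0%} of workers")
print(f"1999: {coverage_1999:.0%} of workers")
```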

Moreover, where traditional pensions have been generous, they have often proved unsustainable. As the Baby Boomers have aged, many employers have found that their earlier commitments were too generous. In many unionized firms, younger workers have ended up paying for the pensions of their predecessors by receiving reduced compensation themselves. The era of defined-benefit pensions certainly did not benefit them. Nor will it benefit public workers who see lower wages — or the clients of public programs that see lower funding — because state and local governments have under-funded their own defined-benefit pension commitments to the next wave of retirees.

Traditional pensions are particularly inappropriate in a world in which job tenure, by consent of employers and employees alike, has declined. It is not at all clear that a worker who can expect to change jobs several times over a career should prefer a traditional pension to a defined-contribution plan that he can take with him. And even though defined-contribution plans shift the risk of poor investment decisions onto employees, they offer, as the flip side of that risk, the opportunity for workers to craft investment strategies more closely tailored to their own preferences for future income. In a recent paper, economist James Poterba of the Massachusetts Institute of Technology and his colleagues determined through simulations that most workers would be better off in retirement under defined-contribution plans than with defined-benefit pensions.

Furthermore, because defined-contribution plans are voluntary, they allow an employer's work force to essentially vote on how much of their compensation they wish to defer until retirement. If more immediate concerns are of higher priority — be it paying bills or saving to put children through college — and participation is relatively low, then the firm can instead pay out what it would have spent toward retirement costs as wages and salaries. If employees are more concerned about retirement, they can choose to have the firm direct more of their compensation to retirement by, say, taking maximum advantage of a 401(k) matching program. This sort of optimization is more difficult under a defined-benefit arrangement in which the commitment to a pension for everyone who meets the vesting requirements produces a balance between current and deferred compensation that may not match workers' preferences.

Moreover, when workers put the investment risk for retirement savings onto their employers — as in a defined-benefit pension — the result is lower pay or benefits, in order to compensate the employer for bearing that risk. In a defined-contribution set-up, however, the absence of a risk premium allows for higher compensation. A final advantage is that defined-contribution plans also constitute a private pre-retirement safety net: Subject to tax penalties and other disincentives, vested funds — including those put in by one's employer — may be pulled out in an emergency, thus reducing rather than increasing economic risk.

Plan configurations aside, the evidence simply does not uphold the claim that Americans' retirement security has been steadily eroding. Indeed, studies from before the recession that compared recent retirees (or people near retirement) with similar groups in the past found that the recent cohorts were as prepared for retirement as the earlier ones, and as wealthy (or wealthier) in retirement. The same holds true for studies comparing the savings of current workers to those of past workers. For example, in 1983, the median American aged 47 to 64 had roughly $350,000 in wealth, including future public and private pensions; in 2007, the median was around $450,000 (both figures in 2007 dollars).

Clearly, the Great Recession has altered this picture for some of today's older workers. Americans who were near retirement and whose savings were heavily exposed to stock markets, or older workers who were counting on real-estate wealth to finance their retirements, have taken a hit. But it appears that the impact has not been so severe as to affect the retirement decisions of many people. At first, Pew Research Center surveys conducted in 2009 and 2010 would seem to indicate otherwise, finding that 35% to 38% of working adults aged 62 or older said they had had to delay retirement "because of the recession." But Census Bureau figures suggest that, of all the 62- to 64-year-olds working in 2009, only 5% would not have been working if 2007 conditions had prevailed. (In 2009, 61% of adults aged 62 to 64 were working, up from 58% in 2007; the difference, divided by the 2009 share, is about 4.9% of the 2009 level.) The Census Bureau number is much lower than the Pew figures, suggesting that most respondents were mistaken and would actually have been working even had the recession not occurred.
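The parenthetical calculation can be written out as a short sketch. It uses the rounded employment shares quoted in the text, so unrounded survey data would give a slightly different result; the function name is hypothetical.

```python
def share_of_workers_attributable_to_change(share_working_before: float,
                                            share_working_after: float) -> float:
    """Fraction of the later year's workers who would not have been working
    had the earlier year's employment share still applied."""
    return (share_working_after - share_working_before) / share_working_after


extra_share = share_of_workers_attributable_to_change(0.58, 0.61)
print(f"{extra_share:.1%} of 2009 workers aged 62 to 64")
```

Either way, the result is roughly 5%, far below the 35% to 38% of older workers who told Pew they had delayed retirement.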

Furthermore, it must be noted that sexagenarians have been increasingly likely to work since the mid-2000s. Thus the small increase in older Americans working from 2007 to 2009 cannot be attributed to the recession alone. Nor should the past decade's increase in the number of sexagenarians working be taken as evidence that Americans simply must work longer because they lack security in retirement. After all, that increase hardly erases a long-term decline. Sixty years ago, the typical worker retired at age 70; this year, he will retire at age 63.

On the whole, the financial condition of today's seniors is at least as good as that of earlier generations. This is not to say, of course, that some elderly Americans are not at greater risk, or that some have not been hit hard by the recession. It does, however, indicate that those problems — as with so many others that have been exaggerated by the purveyors of economic gloom — should be understood within a broader context of stability and security.

DEBT AND BANKRUPTCY

Beyond income and retirement, one of the most common themes of the doomsayers is that there has been an increase in individual and family debt in America. These days, they argue, the broad middle class is never far from the precipice of bankruptcy. Indeed, in The Great Risk Shift, Jacob Hacker declared that "[p]ersonal bankruptcy has gone from a rare occurrence to a relatively common one." Hacker cites research from Harvard professor (and now U.S. Senate candidate) Elizabeth Warren, whose figures show bankruptcy filings growing from 290,000 in 1980 to more than 2 million in 2005.

Even if we took these numbers at face value, they would not support Hacker's claim; rather, they would show that non-business bankruptcies never reached 2% as a share of households in that period. (Less than 2% is certainly an unusual definition of "relatively common.") And we should not simply take Warren's numbers at face value. The trouble with using bankruptcy filings as stand-ins for indebtedness trends is that filing rates can change for reasons other than hardship. For example, in the lead-up to the 2005 federal legislation that tightened eligibility for bankruptcy, filings rose significantly. After the bill was implemented, however, they plummeted. Furthermore, the stigma associated with bankruptcy can change over time, as can the intensity of marketing by firms specializing in bankruptcy litigation. Assessing the extent of over-indebtedness and changes in debt over time requires measures that are less affected by such distortions.

If one uses more objective measures, the tale of growing risk and mounting debt begins to crumble. True, the share of families with any debt at all has drifted upward — from 72% in 1989 to 77% in 2007, according to the Federal Reserve Board's Survey of Consumer Finances. And among families with debt, the median amount of debt was nearly three times higher in 2007 than it was in 1989. But rising debt over this period is only half the story, because assets also rose. In fact, median net worth — assets minus debt — rose by 60% from 1989 to 2007, according to the same source. Some of the rise was illusory — a result of the housing bubble — but the median family was still 22% wealthier in 2009 (after the housing crash and in the midst of the recession) than it had been 20 years earlier.

Taking assets into account also contextualizes the much-discussed rise in student-loan debt. There is little consistent data going back very far, but Federal Reserve Board figures show that, among families with a head of household under age 35, the share of households with education-related debt doubled from 17% to 34% between 1989 and 2007. Among all households with education-related debt, the median amount rose from $4,800 in 1989 to $13,000 in 2007 (adjusted for inflation). But assets rose as well, offsetting the increase in student-loan debt. While she could not examine education debt specifically, economist Ngina Chiteji looked at an earlier Federal Reserve Board survey and found that, as a fraction of net worth, debt among households headed by a 25- to 34-year-old was at the same level in 2001 as it was in 1963. That is a devastating rejoinder to the cottage industry of writers claiming that today's young adults are uniquely burdened.

Furthermore, none of these figures accounts for the intangible asset — the human capital — that is financed by student loans. For instance, the Census Bureau estimates that, among non-Hispanic white men, having a college degree rather than just a high-school diploma translates into an additional $1.1 million in earnings between the ages of 25 and 64. That benefit is more than 50 times the student-loan debt of the median new graduate in 2008. And this lifetime earnings premium has risen over the years — not nearly as much in percentage terms as student-debt loads, but much more in absolute terms.

As for housing debt, foreclosure rates did indeed climb during the recent recession and weak recovery — more than quadrupling between 2005 and 2010, according to the Mortgage Bankers Association. But even at the end of 2010, when the foreclosure rate was at an all-time high, only 4.6% of mortgages were in the foreclosure process. That translates into just over 3% of homes, since three in ten homeowners do not have mortgages. This does not mean that we should be unconcerned that one out of 33 homeowners is losing his home (and if you include those more than 90 days delinquent on their mortgage payments, the figure is just above one in ten). The point is only that even a large proportional increase in the risk of losing one's home need not provoke anxiety in the typical American family, since it remains a rare event.
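The step from mortgages to homes is a single multiplication, sketched below. Both inputs are the rounded figures quoted in the text ("three in ten" is itself an approximation), so the result should be read as a rough check rather than a precise rate. Variable names are hypothetical.

```python
# Share of mortgages in the foreclosure process at the end of 2010 (from the text).
mortgages_in_foreclosure = 0.046
# Seven in ten homeowners carry a mortgage, since three in ten do not.
homeowners_with_mortgage = 1.0 - 0.3

# Share of all owner-occupied homes in the foreclosure process.
homes_in_foreclosure = mortgages_in_foreclosure * homeowners_with_mortgage
one_in_n = 1.0 / homes_in_foreclosure

print(f"{homes_in_foreclosure:.1%} of homes, or about one in {one_in_n:.0f}")
```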

Credit-card debt, which unlike mortgage debt is not secured by an asset, can be much more problematic. And yet even credit-card debt has not dramatically increased over time. The share of families with credit-card debt rose from 40% to 46% between 1989 and 2007, and the median amount of such debt among these families increased from $1,400 to $3,000 in inflation-adjusted dollars. But in both 1989 and 2007, a majority of families had no credit-card debt whatsoever — meaning that median credit-card debt among all families was actually $0 in both years.

Thus, just as with income and retirement savings, claims about personal indebtedness are seriously exaggerated. Here, too, the trends over recent decades do not support the notion that the bottom is dropping out from beneath America's middle class — or that economic risk has exploded.

HEALTH SECURITY

The final, and in some respects the most commonly cited, area in which economic risk is said to have grown dramatically is health care. Indeed, the claim that growing numbers of Americans face catastrophic health-care costs was a crucial justification for the Patient Protection and Affordable Care Act. If the law remains in place, the Congressional Budget Office estimates that it will increase the portion of Americans with health insurance to 93% by 2021 (from 84% before the law's enactment). It thus represents a concrete example of how concern about extreme economic risk can translate into a complete restructuring of a major sector of the economy.

But was the concern justified? There are certainly many Americans who lack health insurance, and compassion is an appropriate response to the hardship faced by people who cannot afford the health care they need. Reform advocates, however, consciously appealed not to humanitarianism but rather to self-interest in making their case. Instead of arguing that the country needed to help a particular disadvantaged subset of the population, these advocates resorted to scare tactics, claiming that the great majority of Americans faced the risk of catastrophic costs. Health insecurity was asserted to be the norm across the middle class; the problem was said to be growing incessantly. And because these claims were grossly exaggerated, they produced a similarly outsized policy solution — one far more interventionist, disruptive, and costly than it should have been.

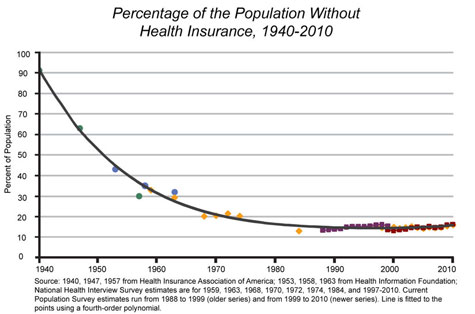

The truth of our health-care situation is far less dire than the Affordable Care Act's champions would have the nation believe. According to the Census Bureau, the share of the population without health insurance increased from 12% in 1987 to 16% in 2010 (the first and last years for which census estimates are available). The National Center for Health Statistics indicates that the decline in private coverage among the non-elderly has been steeper, falling from 79% in 1980 to 61% in 2010. But a simple chart — which, using a variety of sources, pieces together estimates of the fraction of the population uninsured through the decades — puts these recent reversals in stark perspective.

The chart reveals that the health security of Americans has not deteriorated rapidly, as critics claim; rather, our dramatic progress in extending coverage over the decades has petered out. Still, even saying that progress has just slowed overstates the matter considerably — because it overlooks the gains that have been made in the scope of coverage, as health insurance among those who have it has become much more comprehensive. In recent decades, the share of private expenditures on personal health care that has been covered by insurers (rather than paid for directly out of consumers' pockets) has risen sharply — from 51% in 1980 to 70% in 2009. This is particularly true of expenditures on prescription drugs, dental care, home health care, and mental-health services, all of which are covered much more generously today than they were in decades past.

As these covered benefits have expanded — and as the cost of providing health care has climbed — the cost of insurance has obviously increased. Those who would paint a grim economic picture claim that employers have responded to these growing expenses simply by shifting the costs to workers. This transferred burden, the critics argue, shows up as increases in deductibles, co-payments, and other forms of cost-sharing.

The data, however, suggest otherwise. In recent decades, employers have more or less maintained their share of their employees' health-insurance bills. According to National Health Expenditure data made available by the Department of Health and Human Services, public and private workers paid 23% of employer-based insurance premiums in 1987 (the earliest year available) and 28% in 2009.

Of course, some employers have responded to health-care inflation by dropping coverage altogether, or by choosing not to offer it in the first place. But many of those Americans no longer covered by private insurers have been caught by the public sector, particularly through expansions of Medicaid in the late 1980s and the creation of the State Children's Health Insurance Program in 1997. It is also likely that the expansion of public coverage actually led to some of the decline in private coverage. This phenomenon, known as "crowd out," occurs when employers find themselves with reduced incentives to offer coverage because a public alternative exists for some workers and their families. Similarly, state and federal policies mandating that insurers cover specific services may also have contributed to declines in employer coverage by raising the cost of insurance.

Even with the changes caused by climbing costs, spells without health coverage have not grown appreciably in the past 30 years. Over a 28-month period from 1985 to 1987, 28% of Americans lacked health insurance in at least one month, according to the Census Bureau. The chances were lower between 1991 and 1993, when 27% were uninsured for at least a month over a 32-month period, and lower again between 1996 and 1999 once the longer window is taken into account (32% were uninsured for at least a month, but over a 48-month period). A study by the group Third Way found that 23% of working-age adults lacked health coverage in at least one of the 48 months between 2003 and 2007; compared to the same age group in the census figures, this works out to roughly the same percentage as in the shorter 1985-87 time frame. The Third Way study also undermined the claim that health insecurity plagues the broad middle class: Among the three-fourths of working-age adults who lived in households with at least $35,000 in annual income, around 85% were covered consistently throughout 2004-2007.

None of this is to say that health-reform advocates have been wrong to be concerned about the 50 million Americans who do lack coverage. But they have worried far too much about the 250 million people who have coverage, and so have proposed (and enacted) overreaching solutions that are poorly suited to the real problem at hand. This is precisely the danger posed by the exaggerated narrative of economic risk.

POLITICS BY HORROR STORY

Because we have yet to crawl out of the worst downturn since the Great Depression, many Americans are willing to believe every negative description of our economic circumstances, no matter how inflated. But it is important to remember that the economic doomsayers did not originate with the recession. They were active in the 1980s, claiming that de-industrialization would be the death knell of the middle class and that Germany and Japan were destined to leave us in the dust. Their claims were prevalent through most of the 1990s, when outsourcing was said to foreshadow disaster for American workers in the midst of a "white-collar recession" (which subsequent research showed had not hit professionals nearly as hard as popular accounts suggested). And the gloom was apparent in the pre-recession 2000s, in all the talk of jobless recoveries, "stagnating" living standards, and families running to stand still.

The unfortunate irony is that, in proclaiming economic doom, these Cassandras may do more to harm the truly disadvantaged than to help them. Economist Benjamin Friedman and sociologist William Julius Wilson have independently argued that societies are more generous to those in need when economic times are good. It is not clear why convincing the broad middle class that it is teetering on the brink should be more likely to inspire solidarity than to inspire tight-fistedness and hoarding.

The horror stories harm workers in other ways as well. In good times, scaring workers may make them less willing to demand more from their employers in the way of compensation or better working conditions. Former Federal Reserve chairman Alan Greenspan famously cited job insecurity as a primary reason for low inflation (and therefore low wage growth) in the late 1990s; on the left, former labor secretary and American Prospect co-founder Robert Reich concurred.

In bad times, meanwhile, scaring workers (as well as consumers, investors, and entrepreneurs) only delays recovery. Digging out of our current hole will require businesses to create jobs, which will happen only when consumers start feeling comfortable enough to spend money again and when investors start feeling comfortable enough to take risks again. We do not have to whitewash the seriousness of economic conditions to talk more hopefully (and accurately) about the enduring strengths of our economy.

Exaggerating economic problems has political costs, too. It is hard to read Confidence Men, journalist Ron Suskind's recent account of the first two years of economic policymaking in the Obama administration, without concluding that health-care reform distracted the president and his advisors from thinking about how to shore up the flagging economy. Even now, the huge political lift — and fiscal cost — of the Affordable Care Act has made it vastly more difficult to enact policies truly capable of fostering economic growth.

Such effective economic policies and reforms will need to build on our economy's strengths in order to address its weaknesses. By badly distorting our understanding of both, the bogeyman narrative adopted by too many analysts, activists, and politicians makes it more difficult to help those Americans who do face very real hardships and dangers. If we are to effectively confront the fiscal and economic challenges of the 21st century, we will need to begin by seeing things as they really are.