The AI Era in Computing

The rise of artificial intelligence has generated drastically different predictions about its effects on our economy and society. What many of them have in common is a sense that AI signifies a sharp break with the history of computing technology.

Many analysts assume that break will be dark. A classic example is AI researcher Eliezer Yudkowsky, who warned in 2023 that if "somebody builds a too-powerful AI, under present conditions, I expect that every single member of the human species and all biological life on Earth dies shortly thereafter." Others assume the break will bring about dramatic improvements in the human condition. Elon Musk, to take one example, asserted this summer that "[t]he path to solving hunger, disease, and poverty is AI and robotics."

In time, predictions of a sharp disjunction in the human story driven by AI could prove correct. But to understand what this technology means in our time, and where it may be taking us, we must consider the ways in which its development is continuous with the history of computing, and how this continuity relates to economics and our culture.

ERAS OF COMPUTING

Computing has evolved through distinct eras, each marked by revolutionary advances in hardware that enabled humans to interact with machines in fundamentally new ways. This progression follows a consistent pattern: More powerful hardware makes possible more intuitive interfaces, which in turn opens computing to broader populations and entirely new applications. Understanding this pattern helps us recognize that large language models (LLMs) — AI models such as OpenAI's ChatGPT or Anthropic's Claude that are trained on enormous datasets of text and code — represent not AI per se, but rather the latest step in computing's steady march toward more natural human-machine interaction.

The first computing era began during World War II, with room-sized machines built from vacuum tubes. Early computers such as the ENIAC required users to physically manipulate switches and rewire circuits to program them. The interface was the machine itself; programming meant understanding the hardware at the most fundamental level. Only a handful of specialists could operate these systems, limiting their use to essential military calculations and basic scientific research. The hardware was revolutionary for its time, but it remained fragile, used enormous amounts of power, and was accessible only to experts at large institutions.

The invention of the transistor in 1947 launched the second era. Transistors were smaller, more reliable, and consumed far less power than vacuum tubes, enabling computer engineers to construct more sophisticated machines. This hardware advance made it possible to develop machine-language and assembly-language programming. While still requiring deep technical knowledge, these programming languages represented the first layer of abstraction between human thought and machine operation. Programmers no longer needed to physically rewire computers; they could write instructions using symbolic representations of machine operations. This modest step toward more intuitive interfaces expanded the pool of potential users beyond electrical engineers to include mathematicians and scientists.

The mainframe era arrived in the 1960s with the development of integrated circuits, which packed multiple transistors onto single chips. This leap in hardware density and reliability enabled computer engineers to develop time-sharing systems and higher-level programming languages such as BASIC, FORTRAN, and COBOL. For the first time, multiple users could interact with a single computer simultaneously, and programming languages began to resemble something closer to human language. COBOL, designed for business applications, could be read almost like English sentences. These advances made computing accessible to government agencies managing large bureaucracies and financial institutions processing vast numbers of transactions. The political and economic effects were profound: Mainframes enabled the creation of the modern administrative state and the complex financial systems that underpin contemporary economies.

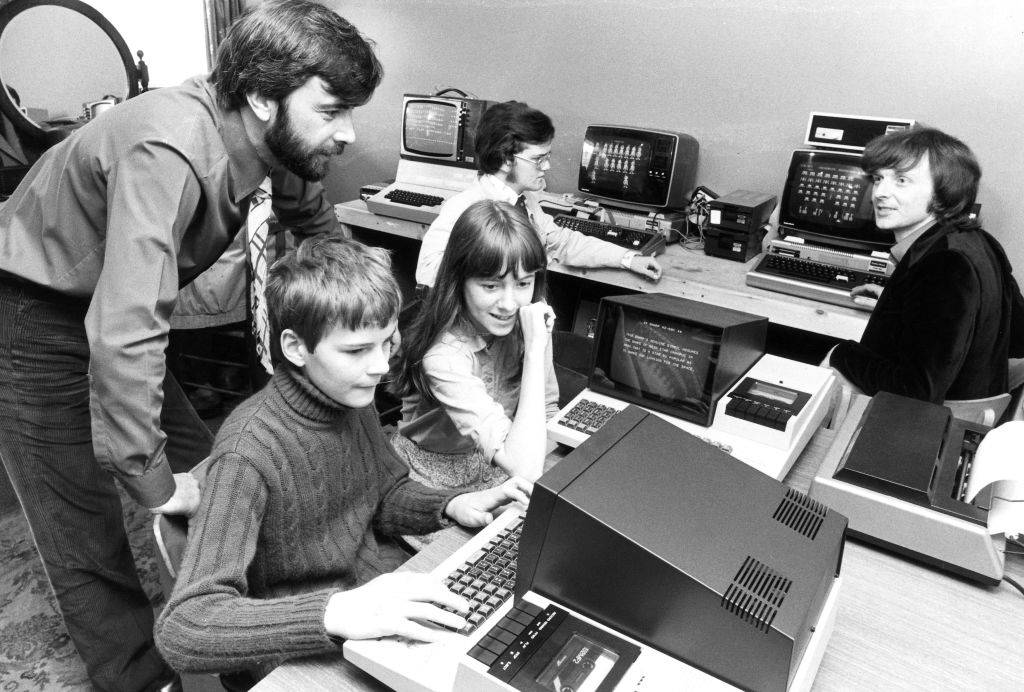

The invention of the microprocessor, which integrated an entire computer's processing power into a single chip, launched the personal-computer revolution of the 1970s and 1980s. This hardware breakthrough, compounded by Moore's Law (the observation that the number of transistors on a chip doubles roughly every two years), enabled engineers to produce machines small and affordable enough for individuals to own. It also made possible graphical user interfaces (GUIs), with their windows, icons, menus, and pointers, that transformed computing from a text-based expert domain into something approaching everyday usability. Suddenly, users could interact with computers using familiar metaphors: Files looked like folders, deletion involved dragging items to trash cans, and operations could be initiated with simple clicks rather than memorized commands.
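The doubling rule mentioned above compounds faster than intuition suggests. The following is a minimal sketch, assuming a clean doubling every two years (an idealization; the actual pace has varied). The function name and the default period are illustrative choices, not figures from any source.

```python
def growth_factor(years: float, doubling_period: float = 2.0) -> float:
    """Return the multiplicative growth after `years`, assuming capacity
    doubles once every `doubling_period` years (an idealized assumption)."""
    return 2.0 ** (years / doubling_period)


# Show how quickly repeated doubling compounds over one and two decades.
for years in (2, 10, 20):
    print(f"{years:>2} years: {growth_factor(years):,.0f}x")
```

Under this assumption, a decade yields five doublings and two decades yield ten, which is why each hardware generation could support interfaces that would have been impractical on the one before.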

Personal computers democratized computing power, bringing it into homes and small businesses for the first time. This development transformed the American economy. Small businesses could now afford sophisticated accounting, inventory-management, and word-processing capabilities previously available only to large corporations. Computerization also dramatically improved efficiency in industries like agriculture and manufacturing. As computers automated routine tasks in these sectors, demand for their products rose more slowly than productivity, leading to a fundamental shift in employment patterns. Workers moved from farms and factories into service industries, particularly those involving information processing and human interaction.

The internet era emerged from advances in networking technology and continued improvements in processing power and storage capacity. The World Wide Web, developed in the early 1990s, created a new interface paradigm — hypertext links and web browsers — that gave users access to vast amounts of information through simple point-and-click navigation. The smartphone amplified this interface revolution by combining powerful mobile processors with touchscreen interfaces to put computing power into billions of pockets worldwide.

The internet's importance extended far beyond individual productivity; it enabled electronic commerce, transforming the retail sector and creating global supply chains of unprecedented complexity and efficiency. Online shopping connected buyers and sellers across vast distances, reducing transaction costs and expanding market reach. Smartphones augmented these effects while enabling entirely new forms of social interaction through social-media platforms. The constant flow of information and the ability to connect instantly with others around the world proved culturally disruptive, changing how people form relationships, consume news, and understand their place in society.

Throughout these transitions, the economic pattern remained consistent: Computerization drove productivity growth in goods-producing industries faster than demand rose for those goods, leading to relative price declines and employment shifts toward services. Agriculture and manufacturing became increasingly efficient but employed fewer people as a share of the workforce. At the same time, demand in sectors such as health care and education increased faster than productivity improvements, causing the costs of these services to rise relative to the prices of goods and creating ongoing economic pressures.

Now we stand at the threshold of a new era, enabled by the latest hardware revolution: the development of specialized processors optimized for parallel computation, particularly graphics-processing units and purpose-built AI chips. These advances, combined with vast increases in data-storage and network capacity, have made it possible to train LLMs at unprecedented scales. The result is not AI as science fiction imagined it, but rather the next logical step in the evolution of human-computer interfaces.

LLMs represent the culmination of computing's decades-long trend toward more natural interaction. Instead of learning specialized programming languages, menu systems, or even point-and-click interfaces, users can now communicate with computers using ordinary human language. As AI researcher Andrej Karpathy put it, "the hottest new programming language is English." This represents perhaps the most dramatic interface revolution since the GUI, making computing accessible to anyone who can speak or write.

More intuitive interfaces will likely expand the user base and enable new applications we can barely imagine today. Just as the GUI made possible desktop publishing and multimedia applications, and the web enabled e-commerce and social media, natural-language interfaces might unlock entirely new categories of human-computer collaboration. The question is not whether this will happen, but what economic and social transformations will follow, and how we can manage them while promoting human dignity and flourishing.

THE ADJACENT POSSIBLE AND CREATIVITY

The most remarkable aspect of LLMs is not their ability to simulate intelligence, but their capacity to amplify human creativity at unprecedented scales. By understanding natural language, these models have turned computers from tools that require specialized expertise into collaborative partners that can work alongside anyone who can articulate ideas. The results are nothing short of revolutionary: Software engineers report significant productivity gains, writers are producing books in weeks rather than months or years, and scientists are discovering new materials and medicines at rates that would have been impossible even a few years ago.

The secret lies in what scientists call "the adjacent possible" — the realm of what's just within reach, the next logical combinations or steps that become conceivable once certain building blocks are in place. Human creativity has always operated in this space. The Beatles' early hits emerged from combining the harmonies of the Everly Brothers with the cadences of Chuck Berry's rhythm and blues. The iPhone represented the adjacent possible of touchscreen technology, mobile processors, and internet connectivity. Each creative breakthrough builds on existing elements, recombining them in ways that seem obvious only in retrospect.

LLMs have dramatically expanded what lies in the adjacent possible for creative professionals. Consider the software engineer who can now sketch out a complex application in natural language and watch as the AI generates working code, complete with error handling and optimization. What once required months of painstaking programming can now be accomplished in hours. The productivity gains are so dramatic that some developers describe the experience as having a team of junior programmers working at superhuman speed, never tiring, never making transcription errors, and always available to iterate on ideas. Unlike a human team of coders, in which each additional person makes communication more difficult (to the point where the cost of keeping some employees up to speed exceeds the benefit of the work they do), a team of one software engineer working with Claude or ChatGPT only has to discuss project requirements with a single AI partner.
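The communication point above can be made concrete with a common back-of-the-envelope model, often associated with Fred Brooks's The Mythical Man-Month: if every pair of collaborators must stay in sync, the number of communication channels grows with the square of team size. The sketch below assumes that fully connected model, which is a simplification; real teams use hierarchy and documentation to reduce the load. The function name is illustrative.

```python
def communication_channels(team_size: int) -> int:
    """Number of pairwise communication channels in a team where every
    member must coordinate with every other member: n * (n - 1) / 2."""
    if team_size < 1:
        raise ValueError("team_size must be at least 1")
    return team_size * (team_size - 1) // 2


# Compare one engineer plus one AI partner (2 participants) with larger teams.
for size in (2, 5, 10, 20):
    print(f"{size:>2} participants: {communication_channels(size):>3} channels")
```

In this model, one engineer working with a single AI partner maintains one channel, while a ten-person team maintains 45, which illustrates why adding people can eventually cost more in coordination than it returns in output.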

This productivity revolution extends across creative disciplines. I know a writer of teen fiction who now feeds plot outlines and character descriptions into AI systems and produces finished books in a fraction of the time previously required. The AI doesn't replace her creativity — it amplifies it. She remains the architect of the story, the judge of quality, the source of emotional resonance. But the AI handles the mechanical aspects of generating prose, allowing her to focus on higher-level creative decisions and produce more work than ever before. I myself was able to craft this essay much faster with the help of AI.

The adjacent possible has expanded into other creative territories. Scientists are using AI to explore previously unthinkable possibilities in drug discovery and materials science. Companies like Atomwise and Iambic Therapeutics are using AI to identify promising drug candidates in months rather than years, with some reporting development costs reduced to as little as 10% of conventional approaches. Insilico Medicine achieved a remarkable milestone by taking an AI-designed drug from concept to Phase I clinical trials in under 30 months, a timeline that would have been impossible using traditional methods.

In materials science, the revolution is equally profound. Google DeepMind's GNoME system has predicted the properties of hundreds of thousands of potential new materials, and researchers have made the predictions for the most stable candidates available through open databases. Materials scientists can now generate millions of new molecular structures, check their viability, predict their properties, and propose cost-effective synthesis pathways. All of these tasks would have required enormous teams working for years using conventional approaches.

The creative applications have continued to multiply in entertainment and other art forms. Musicians are experimenting with AI-generated compositions, though not without controversy. The brief viral success of Velvet Sundown — a band that used the AI music-generation platform Suno to create songs that attracted more than 500,000 monthly listeners on Spotify — demonstrates both the potential and the complications of AI-assisted creativity. Visual artists are using AI to explore new creative possibilities that would have been impossible to generate manually. Video creators are using AI to create backgrounds, produce special effects, and even generate entire scenes.

What distinguishes these applications from mere automation is their collaborative nature. The most successful creative professionals using AI aren't being replaced by machines — they're being amplified by them. The fiction writer still crafts compelling characters and plot structures. The software engineer still designs system architectures and makes crucial technical decisions. The scientist still formulates hypotheses and interprets results. The AI serves as a sophisticated tool that can execute ideas rapidly, explore variations exhaustively, and handle routine tasks with superhuman efficiency.

This collaborative model points to a fundamental shift in how creative work is done. Instead of spending time on mechanical execution, creative professionals can focus on the uniquely human aspects of their work: conceptual thinking, aesthetic judgment, emotional resonance, and strategic decision-making. The AI handles the "grunt work" of implementation, freeing humans to operate at higher levels of abstraction and creativity.

The key to success in this new paradigm lies in what I call "meta-instruction" — the ability to articulate how the author is crafting the artifact. For example, an author might prompt AI with the phrase, "this character lacks self-awareness, so some of her own behavior comes to her as a surprise." Meta-instruction can guide an AI to produce work that matches the author's style and standards. An average writer with excellent meta-instruction skills can become spectacularly productive, while a talented writer who cannot articulate how he writes might struggle to achieve useful results from AI systems.

The implications extend beyond individual productivity gains. We might be witnessing the emergence of entirely new creative forms that were previously impossible. Interactive educational experiences represent just one example. We can imagine AI-powered creative tools that enable real-time collaboration between human creativity and machine capability, generating new forms of art, entertainment, and communication that neither humans nor machines could produce alone.

A question still looms over this creative revolution, however: Will AI remain a complement to human creativity, or will it eventually substitute for it? If creativity truly involves exploring the adjacent possible, AI systems might eventually match or exceed human creative capabilities. After all, they can explore vastly more combinations, consider more variables, and iterate more rapidly than human creators. They don't suffer creative blocks or fatigue. As these systems become more sophisticated, the line between AI-assisted creativity and AI-generated creativity might blur beyond recognition.

This possibility raises profound questions about the nature of creativity itself. If an AI system can generate a compelling novel, a beautiful painting, or a breakthrough scientific hypothesis, what does that say about human uniqueness? The answer might be that creativity has always been about intelligent recombination of existing elements. If this is the case, machines might indeed become as creative as humans — or perhaps even more so.

Others point to the risk that LLMs will provide only counterfeit creativity, of which "deepfakes" (synthetic media that imitate real people) are the most notorious example. Many observers fear that the models will flood the online world with "slop," crowding out genuine creativity.

For now, we are experiencing the early stages of a creative revolution that promises to make human imagination more productive and powerful than ever before. The adjacent possible has never been larger, and the tools to explore it have never been more accessible. The question is not whether this will transform creative work — it already has. The challenge instead is how quickly we can adapt to take advantage of these new capabilities, and how we can maintain the uniquely human elements of thought, judgment, and emotional expression that make creativity meaningful in the first place.

ECONOMIC GAINS AND DISPLACEMENT

The economic impact of any technological revolution lies not in its novelty, but in its capacity to reshape production. The new era in computing faces this test today, and the stakes could not be higher.

For decades, the American economy has been caught in a productivity paradox: The sectors that employ the most people — health care and education — have proven remarkably resistant to the efficiency gains that transformed agriculture and manufacturing. If LLMs can finally crack this productivity puzzle, we might witness economic transformation on a scale not seen since the Industrial Revolution. But such achievements would come with profound displacement, forcing us to confront fundamental questions about what kinds of work will remain valuable in an age of AI.

This pattern of advances and displacement reflects what economist Joseph Schumpeter called "creative destruction" — the process by which technological improvements simultaneously create new value and destroy old ways of doing things. The Industrial Revolution followed this script. In 1870, around half of American workers labored on farms. By 1970, that figure had fallen to less than 5%, as mechanization made agriculture dramatically more productive while requiring far fewer hands. Workers displaced from farms found new opportunities in factories, where the growing manufacturing sector absorbed millions of laborers who produced the goods that rising productivity in agriculture had made affordable for ordinary families.

But manufacturing, too, eventually fell victim to its own success. Since World War II, manufacturing productivity has risen faster than demand for manufactured goods, leading to a steady decline in manufacturing's share of employment. In the 1950s, manufacturing accounted for over a quarter of private-sector American jobs. Today, that figure has fallen below 10%, even as manufacturing output has increased by more than 80% since the 1980s. The pattern is unmistakable: When productivity increases faster than demand, employment shrinks even as total output expands.
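The arithmetic behind this pattern is simple: if employment is approximated as output divided by output per worker, then employment grows at roughly the growth rate of output minus the growth rate of productivity. The sketch below illustrates this with hypothetical growth rates (2% annual output growth and 3% annual productivity growth over 40 years). These rates are chosen only for illustration and are not estimates for American manufacturing.

```python
def project(output_growth: float, productivity_growth: float, years: int):
    """Project output and employment indices (both starting at 1.0),
    treating employment as output divided by output per worker."""
    output_index = (1.0 + output_growth) ** years
    productivity_index = (1.0 + productivity_growth) ** years
    employment_index = output_index / productivity_index
    return output_index, employment_index


# Hypothetical rates: demand-driven output grows 2% a year,
# while output per worker grows 3% a year.
output_index, employment_index = project(0.02, 0.03, years=40)
print(f"Output index after 40 years:     {output_index:.2f}")
print(f"Employment index after 40 years: {employment_index:.2f}")
```

With these assumed rates, output more than doubles while employment falls by roughly a third. Reversing the two rates gives the opposite result, which is the situation the essay goes on to describe in health care and education.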

Meanwhile, employment has shifted dramatically toward services, particularly those sectors where demand has escalated faster than productivity gains can accommodate. This shift becomes clear when examining how different industries have claimed larger shares of the American economy in the past half-century. The numbers tell a striking story of economic rebalancing driven by differential productivity growth.

Health care represents the most dramatic example of this transformation. In 1970, the industry accounted for approximately 7.3% of America's GDP — a figure roughly comparable to that of other developed nations. But while health-care spending in other countries stabilized or rose slowly in the decades since, American health-care costs experienced an extraordinary expansion. By 2023, health care represented 17.6% of GDP, with spending projected to reach above 20% by 2033.

Education has followed a similar trajectory, though the expansion is less pronounced. Government spending on education, which captures most but not all educational investment, has increased from roughly 3% of GDP in 1950 to around 6% today. When private educational spending is included, total educational investment likely approaches 8% of GDP. When combined, health care and education now account for roughly one-quarter of the entire American economy — a historically unprecedented concentration of economic activity in sectors that have proven largely immune to productivity improvements.

But the benefits of this resource shift into health care and education can seem elusive. Despite spending far more per capita on health care than any other developed nation, Americans experience shorter life expectancies and worse health outcomes than citizens of countries that spend half as much. Similarly, despite dramatic increases in per-pupil spending in recent decades, Americans' educational achievement has remained largely stagnant, with student performance on standardized tests showing little improvement since the 1970s.

The fundamental problem is that health care and education have remained stubbornly labor intensive, while costs have risen faster than productivity gains. A doctor today sees roughly the same number of patients per day as a physician 50 years ago despite having access to electronic health records, advanced diagnostic tools, and telemedicine capabilities. A teacher today instructs roughly the same number of students as an educator in 1970 despite having access to computers, interactive whiteboards, and online-learning platforms. Meanwhile, a single farmer today feeds over a hundred families, and one factory worker produces goods that would have required dozens of employees in previous generations.

Health care and education are thus ripe for disruption, and early indicators suggest that LLMs might finally provide the productivity breakthrough that these sectors have long resisted. In health care, we are beginning to see promising applications in both medical research and clinical practice. As discussed above, AI systems are accelerating drug discovery, with Insilico Medicine taking an AI-designed drug from concept to Phase I trials in under 30 months and some companies reporting cost reductions of as much as 90%. Materials-science research has similarly accelerated: AI systems are now predicting the properties of hundreds of thousands of new compounds that could revolutionize medical devices and treatments. These research productivity gains could multiply over time, leading to better treatments that are developed faster and at lower cost.

Also promising are the applications for medical diagnosis and treatment. Microsoft's recent study of AI diagnostic capabilities provides a tantalizing glimpse of this potential. According to the study, the company's AI system correctly diagnosed 85% of complex medical cases featured in the New England Journal of Medicine, which were specifically chosen to challenge human physicians. Human doctors achieved a 20% accuracy rate on the same cases. Remarkably, the AI system accomplished this superior performance while spending 20% less on expensive diagnostic tests. If such results can be replicated and scaled, they suggest AI could improve medical outcomes while reducing costs, which is precisely the productivity breakthrough that has eluded health care for decades.

In education, the transformative potential is equally compelling, though perhaps further from realization. Technologists have long dreamed of highly personalized AI tutors capable of adapting to each student's learning style, pace, and interests — something approaching the "Young Lady's Illustrated Primer" depicted in Neal Stephenson's science-fiction classic The Diamond Age. Current AI tutoring systems fall far short of this vision, lacking the sophistication and adaptability that would make them truly effective teaching companions. Experiments such as Alpha School in Austin, Texas, however, offer tantalizing indicators of what might be possible.

The school claims its AI-powered learning platform enables students to master academic material 2.6 times faster than traditional approaches, completing standard curricula in roughly two hours per day rather than six or seven. Students reportedly advance through multiple grade levels per year while maintaining high comprehension and retention. If true and scalable, such approaches could revolutionize educational productivity, allowing students to acquire the same knowledge in a fraction of the time while freeing up resources for other activities.

Significant questions remain, however, about whether these results are replicable and scalable. Alpha School serves a highly selective student population and features unusually favorable teacher-to-student ratios. Moreover, Alpha School does not use generative AI in the way most people understand it, relying instead on more traditional adaptive-learning software combined with human instruction and extrinsic motivation, the last of which is particularly controversial among educators. Whether this approach can work under less favorable conditions and with truly generative AI systems remains to be proven.

We can hope that the conversational interface that is now possible will enable computers to keep students motivated and provide timely and appropriate feedback. But skeptics can point to the adverse impacts of smartphones or the failures of Zoom-based education during the pandemic and question whether technology represents the solution or the problem for human learning.

If the early signs of promise prove accurate and scalable, the economic implications would be profound. Health care and education together employ roughly 35 million Americans — more than one in five workers. A genuine productivity revolution in these sectors could dramatically improve outcomes while requiring far fewer workers. We might finally achieve what Americans have long sought: health-care and education services that are high quality, broadly accessible, and reasonably priced.

Such transformation, however, would displace workers on a scale not seen since the decline of agricultural employment. Millions of health-care workers, teachers, and educational administrators could find their jobs eliminated or fundamentally altered. Unlike previous waves of technological displacement, which moved workers from declining sectors to booming ones, this destabilization would occur in the industries that have been absorbing those employees for decades.

If health care and education become significantly more productive but also shed millions of jobs, what other sectors could absorb these displaced workers? Historical precedents offer limited guidance, especially because prior technological revolutions created new industries that did not previously exist. The automobile industry has employed millions who never worked with horses. Computers created entirely new categories of work that could not have been imagined in the pre-digital era.

Contemporary research on automation suggests several possibilities. Personal services that require human interaction and creativity might expand as people have more disposable income from cheaper health care and education. Environmental projects could become much more widespread as society prioritizes sustainability and climate adaptation. Entertainment, hospitality, and experience-based industries might absorb significant numbers of workers as people seek meaningful ways to spend time and money freed up by the more efficient provision of essential services.

But these sectors themselves might also face productivity pressures from AI systems. If LLMs can write compelling stories, compose music, and create visual art, how much human labor will entertainment industries require? If AI systems can provide personalized travel recommendations and customer service, how many hospitality workers will be needed? The challenge is not just finding new sectors for displaced workers, but identifying industries that will remain labor intensive in an age of increasingly capable AI.

For this reason, the economic transformation ahead might be more profound than previous technological revolutions. Rather than simply shifting workers from one sector to another, we might be approaching a world where human labor becomes less central to economic production across most industries. This possibility forces us to confront fundamental questions about how economic value is created and distributed when machines can perform an ever-expanding range of human tasks.

For now, these remain speculative scenarios. The productivity gains in health care and education are still in the early stages and unproven at scale. Many previous technologies that promised to revolutionize these sectors — from television-based education in the 1960s to computer-assisted instruction in the 1980s to online learning in the 2000s — ultimately delivered far less than their advocates promised.

But the early indicators suggest this time might be different. The combination of natural-language processing, vast knowledge bases, and adaptive-learning algorithms represents a qualitatively different technological toolkit from that of previous innovations. For the first time, we have technologies that can engage in sophisticated reasoning, provide personalized instruction, and handle complex problem-solving tasks that previously required extensive human training. Applying these technologies to health care and education might finally solve the cost crises that have made such services increasingly unaffordable for ordinary families. But we will also need to grapple with displacement on a scale that could dwarf previous technological transitions.

The question is not whether such transformation will be beneficial — better health care and education at lower cost would obviously improve human welfare. Instead, we must consider how to manage the transition in ways that broadly distribute the benefits while providing support for those whose economic roles are diminished or eliminated. The new era in computing might finally deliver the productivity gains we have long sought, but it will test our ability to ensure that technological progress serves human flourishing rather than mere economic efficiency.

BARRIERS TO AGI AND THE COMPUTER TAKEOVER

AI's social and economic transformations will likely be profound, but there are also limits on how far and how fast the technology can advance. Significant barriers separate current LLMs from the artificial general intelligence (AGI), an AI that achieves something like human consciousness, that both enthusiasts and doomsayers envision. These obstacles are not mere technical puzzles waiting to be solved, but fundamental challenges that might require decades to overcome, if they can be overcome at all.

The first barrier is one that has historically constrained every general-purpose technology: It takes time, often generations, for humans to adapt and fully exploit new capabilities. As the saying derived from Max Planck's observation about scientific progress goes, science advances one funeral at a time. The same principle applies to technological adoption in the broader economy.

Consider the electric motor, invented in the 1830s but not widely adopted in manufacturing until nearly eight decades later in the 1910s. Economic historians Paul David and Gavin Wright documented how this delay reflected not just technological limitations, but the time required for entrepreneurs and engineers to discover how to reorganize production around the new technology. Early factories simply replaced their central steam engines with electric motors, maintaining the same shaft-and-belt systems that distributed power throughout the facility. Only gradually did manufacturers realize that electric motors could be distributed throughout the factory, allowing for flexible layouts and more efficient production processes.

Similarly, economist Zvi Griliches famously studied the diffusion of hybrid corn varieties in American agriculture during the 1930s and 1940s. Despite clear evidence that hybrid seeds dramatically outperformed traditional varieties, adoption followed a slow S-curve that took decades to complete. Griliches showed that this wasn't due to irrational behavior — farmers needed time to learn about the new technology, observe its performance in local conditions, and adapt their farming practices. Each region had different soil types, weather patterns, and economic constraints that affected the optimal timing of adoption.

The internet provides a more recent example. In the mid-1990s, many business executives responded to email by having their secretaries print out messages for them to read and respond to on paper. The executives understood that email was potentially useful, but they hadn't yet reorganized their work habits to take advantage of its capabilities. Only as a new generation of workers — people who had grown up with computers — entered the labor force did organizations begin to unlock the internet's true potential for transforming business operations.

The same pattern will likely constrain AI adoption. While "AI native" younger workers might adapt more quickly to using AI systems, most organizations remain structured around human-centered workflows. Managers need to learn how to delegate tasks to AI systems, how to verify their output, and how to integrate AI-generated work with human expertise. These are not just technical challenges, but organizational and cultural ones that might take decades to fully resolve.

The second barrier involves the challenge of operating in the physical world. In principle, the ability to program computers using natural language should enable rapid progress in robotics. LLMs will make it easier to instruct robotic systems to perform physical tasks. But key challenges remain.

At the heart of robotics development lies a paradox: Tasks that are easy for humans often seem next to impossible for machines, while problems that appear impossible for humans can be trivial for computers. A computer can calculate grandmaster-level chess moves in milliseconds, but picking up a chess piece from a physical board remains a complex engineering challenge. The simple act of grasping an object requires precise control of force and position, real-time sensory feedback, and the ability to adapt to variations in object shape, weight, and surface texture.

The physical world is vastly more complex and unpredictable than the digital environments where AI systems excel. In software, the rules are clearly defined and consistent. When a deterministic program encounters a specific input, it will always produce the same output. But in the physical world, no two situations are exactly alike. A robot attempting to fold laundry must deal with fabrics of different weights and stiffness, clothes that might be damp or wrinkled, and lighting conditions that affect its vision systems. These variations require a level of adaptability that current systems struggle to achieve.

Despite massive investments in robotics research, progress remains slow. Autonomous vehicles, which operate in the relatively structured environment of roads with painted lines and traffic signals, have required decades of development and still struggle with edge cases like construction zones or unusual weather conditions. Household robots remain largely limited to simple tasks like vacuuming floors or mowing lawns — activities that can be performed through systematic coverage of a defined area rather than complex manipulation of objects.

The robotics industry itself acknowledges these limitations. Current development focuses on "collaborative robots" designed to work alongside humans rather than replace them entirely, and on highly constrained environments such as factory assembly lines, where conditions can be carefully controlled. Even the most advanced humanoid robots showcased at conferences remain largely demonstration projects rather than practical tools ready for widespread deployment.

The third barrier concerns the complexity of tasks that AI systems can reliably handle. Current discussions of AI "agents" often focus on relatively simple examples — systems that can book flights, schedule meetings, or answer customer-service inquiries. While these capabilities are promising, they represent a narrow slice of what would be required for AGI.

Several examples illustrate this limitation. Customer-service chatbots work well for common inquiries but require human escalation for complex problems. AI coding assistants can generate functions and fix bugs but need human oversight for software-architecture decisions. Writing assistants can draft content but must be complemented by human judgment about audience, tone, and strategic messaging.

Recent research on AI agents' capabilities confirms these limitations. Studies measuring AI systems' ability to complete long, complex tasks show that current models can handle tasks that take humans hours but struggle with assignments that would take humans days or weeks to complete. Even specialized agents designed for specific domains achieve only modest success rates on challenging benchmarks: around 14% success on complex web-based tasks, compared with 78% for humans.

The problem is not just technical capability, but reliability. An AI system might successfully complete a complex task 80% of the time, but the consequences of failure in the remaining 20% often make human oversight necessary. This creates another paradox: The most valuable applications for AI agents — those involving high-stakes decisions or complex multi-step processes — are precisely those where reliability requirements are highest and current systems are least dependable.
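A rough way to see why multi-step processes are so demanding: if each step of a task succeeds independently with the same probability, the chance of finishing the whole task falls geometrically with the number of steps. The independence assumption, the function name, and the 95% figure below are illustrative choices, not findings from the research cited above.

```python
def task_success_probability(step_success: float, steps: int) -> float:
    """Chance that every step succeeds, assuming steps fail independently."""
    if not 0.0 <= step_success <= 1.0:
        raise ValueError("step_success must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    # Independent steps multiply: p * p * ... * p (steps times).
    return step_success ** steps


if __name__ == "__main__":
    # Hypothetical per-step reliability of 95%.
    for steps in (1, 5, 10, 20):
        overall = task_success_probability(0.95, steps)
        print(f"{steps:>2} steps at 95% per step -> {overall:.0%} overall")
```

Under these simplified assumptions, a system that is 95% reliable on each step completes a ten-step task only about 60% of the time, which is one reason long, high-stakes workflows tend to keep a human in the loop.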

The final barrier involves persistent memory and personalization. Visionaries speak of AI assistants that will remember everything about their users, building deep relationships and providing increasingly personalized service over time. The reality is that most current AI systems are severely limited in maintaining context across sessions.

LLMs typically have short "context windows" — they can consider only a fixed amount of recent conversation history when generating responses. While these windows have expanded significantly in recent years, they remain fundamentally constrained by computational costs. Processing millions of words of conversation history for every interaction would be slow and prohibitively expensive.
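A back-of-the-envelope sketch of the cost problem: if an assistant reread its entire conversation history on every turn, the total number of tokens it processed would grow roughly with the square of the number of turns. The function name and the 500-tokens-per-turn figure are hypothetical, and real systems use caching and other optimizations that this sketch deliberately ignores.

```python
def cumulative_tokens_processed(turns: int, tokens_per_turn: int) -> int:
    """Total tokens read if the full history is reprocessed on every turn."""
    if turns < 0 or tokens_per_turn < 0:
        raise ValueError("turns and tokens_per_turn must be non-negative")
    # On turn k the model rereads all k turns so far: k * tokens_per_turn.
    return sum(k * tokens_per_turn for k in range(1, turns + 1))


if __name__ == "__main__":
    # Hypothetical conversation sizes, at 500 tokens per turn.
    for turns in (10, 100, 1000):
        total = cumulative_tokens_processed(turns, 500)
        print(f"{turns:>4} turns -> {total:,} tokens processed in total")
```

In this toy model, a conversation 100 times longer requires several thousand times as much processing, which illustrates why simply keeping everything in the window does not scale.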

Personalized AI assistance requires not just remembering facts, but understanding preferences, goals, and contexts that evolve over time. A human assistant learns not only that a person prefers morning meetings, but also when he's likely to be stressed about deadlines, which colleagues he works best with, and how his priorities shift between different projects. This kind of deep, contextual understanding requires the ability to identify patterns across months or years of interaction — something that goes far beyond current systems' capabilities.

The computational challenge of scaling such understanding to hundreds of millions of users is staggering. Even if we could create AI systems capable of truly understanding individual people, providing this level of personalization to everyone would require computational resources that might be economically unfeasible. Current AI models already consume enormous amounts of energy and computing power for relatively simple tasks; the resources needed for fully personalized intelligence at a global scale might be several orders of magnitude larger.

These barriers appear impenetrable, and yet AI developers are still attempting to break through them. In robotics, foundation models trained on vast datasets of robotic interactions are beginning to show improved performance on tasks involving physical manipulation. Companies like Physical Intelligence have raised hundreds of millions of dollars to build large-scale datasets of robot behavior, much as LLMs were trained on massive text corpora. The logic is compelling: Just as language models improved dramatically with scale, robotic intelligence might follow a similar trajectory.

In the realm of AI agents, systems are becoming more capable at handling multi-step reasoning and tool use. Advances in "chain of thought" prompting and self-correction algorithms enable AI systems to break down complex problems and verify their own work. Some recent benchmarks show rapid improvement in agents' ability to complete longer tasks, with the length of tasks they can complete doubling roughly every seven months according to certain measures.
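To put the seven-month figure in perspective, the sketch below works out how long a steady doubling would take to stretch from hour-long tasks to week-long ones. It assumes that the doubling refers to the length of task an agent can complete, that the trend simply continues, and that agents start at one hour; the function name and the starting point are hypothetical.

```python
import math


def months_until(target_hours: float, current_hours: float,
                 doubling_months: float = 7.0) -> float:
    """Months for task length to grow from current to target at a fixed doubling time."""
    if target_hours <= 0 or current_hours <= 0 or doubling_months <= 0:
        raise ValueError("all arguments must be positive")
    # Number of doublings needed, times the months each doubling takes.
    doublings = math.log2(target_hours / current_hours)
    return doublings * doubling_months


if __name__ == "__main__":
    # Hypothetical starting point: tasks that take a human one hour.
    for label, hours in (("one work week", 40), ("one work month", 160)):
        months = months_until(hours, 1)
        print(f"{label} ({hours} h): about {months:.0f} months")
```

Even on this optimistic extrapolation, moving from hour-long tasks to week-long ones takes roughly three years, and the calculation says nothing about whether the trend will hold or whether reliability will keep pace.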

These advances, however, remain preliminary. Robotic foundation models work well in controlled laboratory settings but struggle with the variability of real-world environments. AI agents show improvement on benchmarks but often fail when deployed in production systems where reliability is crucial. Memory systems can recall relevant facts but lack the deep understanding of context and relationships that characterizes human memory.

Each of the four barriers presents a formidable challenge to reaching the point where computers can fully take over for humans. Advancements will likely be incremental as AI systems gradually become more capable in specific domains rather than achieving sudden breakthroughs toward general intelligence. Perhaps the most significant difficulty is that these barriers interact with each other in complex ways. True AGI would need to overcome all of them simultaneously — adapting to human organizations, operating reliably in the physical world, handling complicated long-term projects, and maintaining personalized relationships with individual users. The compound problem of solving all these challenges together might be greater than the sum of the individual parts.

This does not mean that current AI systems are unimportant or that improvements will be slow. Within each domain, AI capabilities continue to advance rapidly, creating enormous value even without achieving AGI. But it does suggest that the transformative changes many envision might unfold over decades rather than years, giving society more time to adapt to the implications of increasingly capable AI. And it implies that fears for our safety should be focused on other humans, not AI.

KNOW THYSELF

The classic folk song "John Henry" tells of a legendary black laborer in the 19th century who competes with a steam drill to construct a railroad tunnel and dies trying. The comic duo the Smothers Brothers created a different ending for the song, in which Henry says: "I'm gonna get me a steam drill, too, Lord/Lord, get me a steam drill, too."

The Smothers Brothers' adaptive alternative offers wisdom for how Americans might survive this new era of AI computing. Those workers who are able to collaborate with the new models will thrive. As explained above, AI assistants will work better with accurate "meta-instructions," meaning the user has to know what makes him good at something. The user has to be able to articulate: This is how I write; this is how I construct software; this is how I make a video. A good writer who cannot explain his process will be frustrated by models' inability to meet his own standards of composition. An average writer who can give good meta-instructions will be spectacularly more productive.

AI's widespread deployment could yield massive social and economic transformations, but we must keep in mind that we have not endowed computers with intelligence, and are unlikely to do so in the near future. The jobs of today and tomorrow will still need humans — their conceptual thinking, prudential judgment, contextual understanding, and emotional depth. Adapting well to the era of AI will require putting its character in context, and approaching its future with the humility to understand that no one can reliably predict what's coming.